Karel Bourgois has been part of TADSummit and TADHack since the beginning. He was the first of us to point out that the transformer model had changed the rules in AI.

His presentation covers the latest from Voxist, but also provides an excellent timeline of the development of AI. He begins with some McKinsey material. This year McKinsey produced some really bad analysis on network APIs. Their lack of knowledge in the API space, coupled with their corporate arrogance, produced a report of epic numbers and epic laughs. Now, whenever a McKinsey chart is used, the same hilarity ensues.

AI as a field of study began at the Dartmouth Summer Research Project in 1956. From there, AI research blossomed from the ’50s to the ’70s. But a lack of progress into applications resulted in an AI Winter from the ’70s to the ’80s. Then an AI Renaissance occurred in the ’90s and ’00s as machine learning showed promise.

In the ’10s, deep learning showed continued promise, and the transformer model delivered the leap forward to GPT-3. Within one year of GPT-3’s launch, AI was being talked about everywhere.

Here’s the video Karel was unable to show. It’s the pre-launch teaser for General Magic’s Portico (1996), the first virtual assistant (her name was Mary) that could fully interact with your calendar, emails, calls, voicemail, web, weather, stocks, etc.

Wildfire was founded in 1992 and bought by Orange in 2000 for $142M. The point is that voice agents existed in the mid-’90s, well before Siri (2011) and Alexa (2014). But the challenge for voice agents has always been finding viable use cases in the real world. In my home, Alexa gets used as a cooking timer or for reminders. It still sucks for many other use cases.

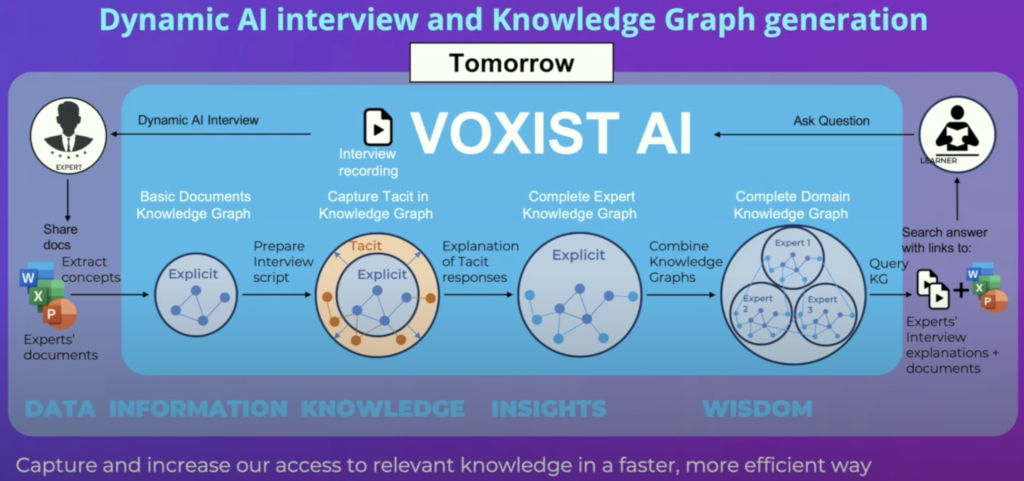

Voxist exists to put an end to the saying “words fly away, writing remains” in the enterprise. Within an enterprise, only 10% of knowledge is explicit; the rest is implicit (tacit). That’s why getting up to speed in a job can take years. With the retirement of the baby boomers, tens of thousands of years of experience are being lost. This is one of the challenges Voxist is addressing.

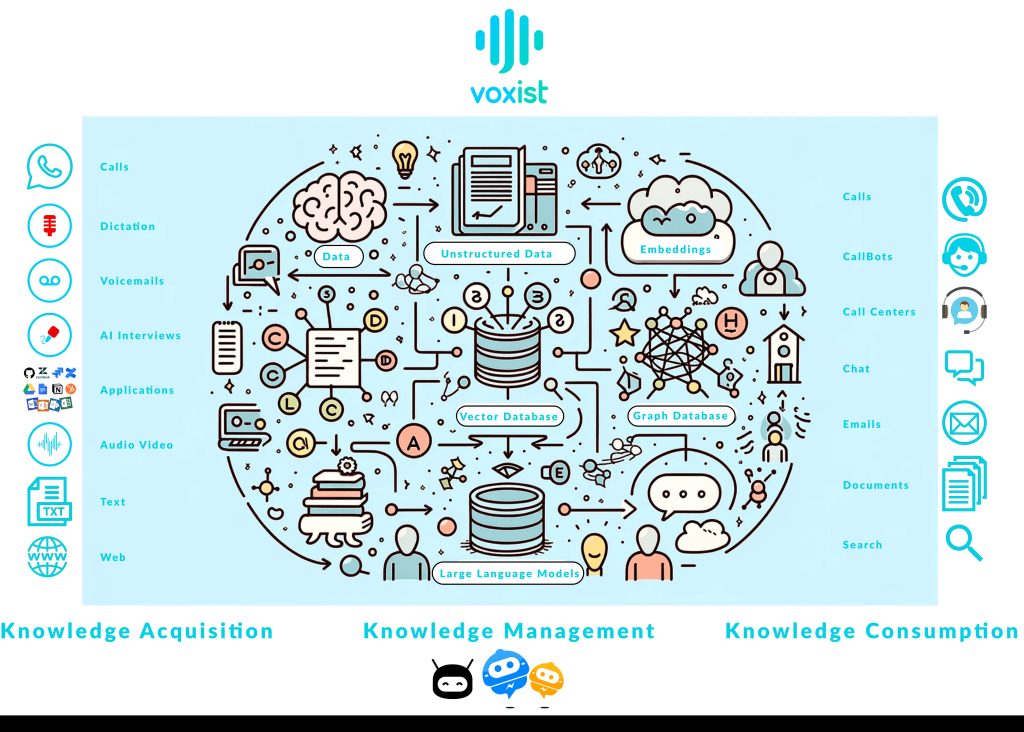

Their platform (see below) transforms conversations in the enterprise into AI-powered business insights. Jokingly, Karel positions the platform as having implemented vocal RAG (Retrieval-Augmented Generation) before RAG existed. Your own data is what counts: if you’re a baker, it’s your recipe for chocolate cake that matters, not a generic recipe contained in the LLM.

What makes Voxist’s platform unique is that it works in real time. Karel refers to the jambonz article, “Text-to-speech latency: the jambonz leaderboard.” All the models have improved over the past year, with latencies getting close to 100ms. Customer conversations happening now can influence current decision making, enabling your business to respond in real time.

With their platform, Voxist gathers all the explicit information in an enterprise and uses it to build questions that capture the tacit knowledge. The challenge is that we often don’t know what we know until someone asks us a question. This enables Voxist to build a complete knowledge graph specific to each enterprise. Hence the secret sauce remains safe within the organization, rather than being harvested by a large tech company.

Karel shows the continued rapid development of language models across MAMBA, ZAMBA, SAMBA, and JAMBA (a hybrid transformer/MAMBA language model). This brings us back to RJ Burnham’s comment that whatever we choose at the moment is going to be wrong, given the rapid development of the technology. However, an edge is an edge if it means you cost-effectively win more business.

Karel raised the recent finding that when a model is trained for a long time, it can begin to improve again, so-called grokking. Grokking is a phenomenon in machine learning where a neural network suddenly improves its ability to generalize from patterns in data after extensive training, long after it has fit its training set. It’s also known as delayed generalization. Karel is not a believer in AGI (Artificial General Intelligence), but he thinks significant improvements in speed, latency, and understanding are still to come. Flexibility is key when you’re working in AI.
