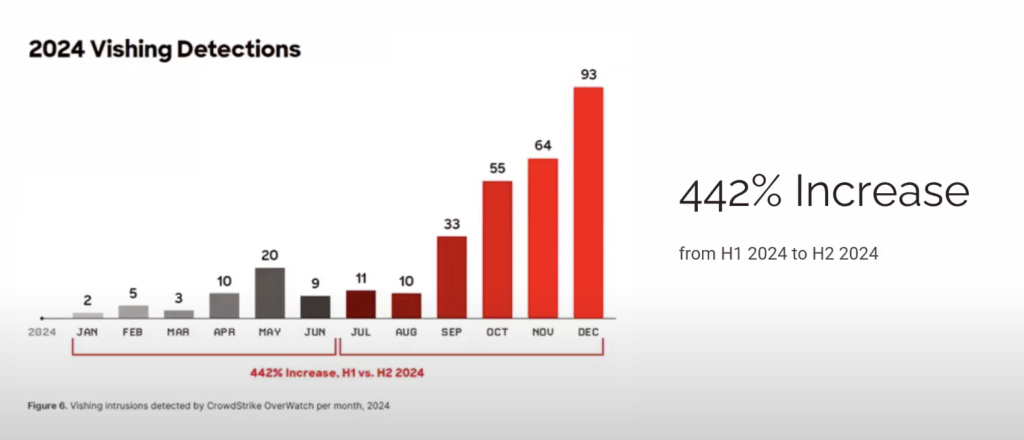

Voice AI attacks rise 442%

AI-powered voice attacks are surging, with vishing (voice phishing) attempts increasing 442% in the latter half of 2024. Criminals can now clone the voice of a CEO to demand an urgent wire transfer, making the threat more persuasive than ever. Meanwhile, traditional security training – with its generic videos and quizzes – is stuck in the past, creating a “checkbox culture” where learning lacks depth and is quickly forgotten.

This session explores how to fight AI with AI. Join Enrico Faccioli to learn how to move beyond outdated methods and test your organization against realistic voice threats. Discover how personalized, 1-on-1 AI voice coaching can replace passive videos, building true resilience by letting employees learn through conversation.

Bohdan unfortunately had to leave the call for some time when an air raid siren went off. Ukraine continues to be subject to sustained Russian drone attacks. I remain shocked that such brutality is allowed to continue without severe sanctions.

I must thank T-Bot, explorer at voiceaispace.com, for the introduction to Enrico. Next week we will hear from the man himself.

Introduction to Vishing

Enrico opens with the basics of vishing (voice phishing), covering some use cases and defense strategies. There’s solid common ground with last week’s SKT session on the importance of culture and the triumvirate of people, process, and technology.

The two companies Enrico runs are:

- vishr.ai – Simulate real-world vishing threats with AI-powered voice impersonation and train your team with 1:1 tailored coaching.

- seedata.io – SaaS platform that brings the benefits of deception technology and threat intelligence to your organisation’s security program with minimal integration requirements and operational burden.

- Seedata.io automatically plants deception assets that detect incidents early and provide enriched notifications directly to your security team, enabling a swift and effective response.

- Raised £1.4M from amazing investors and is part of the Antler LON3 cohort.

Both companies share a common approach: using hackers’ technologies to help enterprises protect themselves. Vishr.ai was founded in response to customer demand. Simply put, vishing is rising rapidly and enterprises are seeking protection from the threat.

About 90% of vishing calls come from humans and only about 10% from AI; however, the growth is driven by AI. And as we discussed last week on the SKT hack, AI enables phishing campaigns to be created quickly and experimented with in new ways.

Vishing Use Cases

Enrico runs through an example of an urgent call on a Friday afternoon from the CEO, needing a cash transfer for a deal. The social engineering is carefully crafted and it works; we see it all the time in successful cyber attacks. In fact, Enrico is working on a social engineering book; more on that once I get the Amazon link.

Last year at Defcon ’24, AI was set against humans: in 22 minutes the AI scored 17 while the humans (professional social engineers) scored 12. None of the targets (regular people) realized they were talking to a machine or being targeted. We’re at the precipice of a dramatic acceleration in scams. For Defcon ’25 it will be bots versus bots, and Enrico has something to announce there.

Vishing at Scale

The architecture for creating a vishing platform is:

- Data collection using OSINT (Open Source Intelligence): gathering and analyzing publicly available information to produce actionable intelligence, drawn from freely accessible sources such as websites, social media, news articles, and public records.

- Voice cloning. Aaron asked why anyone would create a technology that can be used for such nefarious purposes. At the VAPI hackathon, it was used to make a Father’s Day present, to show what could be built. It’s also used for fun. But generally it’s being used to scam people.

- Conversational LLM to execute the dialogue, social engineering, emotions / sense of urgency, etc.

- VoIP system to create spoofed calls.

In 2025, the above platform enables vishing at scale:

- Scale: hundreds or thousands of calls in parallel;

- Convincing, real, familiar voices, given all the content freely available on sites such as YouTube;

- AI agents: a script that learns and adapts to achieve the criminal’s objectives;

- Near-zero latency: with voice-to-voice, conversations feel natural, a relatively recent improvement;

- Synthetic background noise, so the call sounds like it’s coming from an airport; and

- Multilingual: I’m still proud of the Swahili used at TADHack. Voices can also carry an accent that fits the social engineering. It’s all about making the story convincing.

Vishing Realities

We discussed the uptick in vishing in Denmark, long ignored by criminals given the small number of people to scam, but now a target because voice AI agents can speak reasonable Danish. Australia too is being targeted by many hackers across Asia, using accented Australian English. The 2024 data shows the trend.

Email phishing is relatively under control; filters are in place across most organizations. Vishing, however, lacks such protections, people often answer the calls, and recent improvements in voice AI make that gap far more dangerous. Voice remains powerfully persuasive, as we see in the robocalling scams against the elderly.

It only takes a 30-second sample to clone a voice. There is so much information online about an organization: its people, processes, and voices. What do you do if you cannot trust a familiar voice that appears to know your business?

Criminals’ preferred targets are new hires and remote staff. LinkedIn is a source of targets through new-employee updates. Vishing also does not need to be used for immediate fraud; it can be used to obtain credentials or sensitive information for a later hack.

It all comes back to the classic social engineering playbook: authority, urgency, and familiarity, so the target does not make a full rational assessment at the moment of attack.
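To make the playbook concrete for training, here is a minimal Python sketch that flags which of the three cues appear in a call transcript. The phrase lists, `flag_cues`, and the two-cue threshold are hypothetical illustrations, not vishr.ai’s method; plain keyword matching is easy to evade and only shows how the cues stack up in a single call.

```python
import re

# Hypothetical phrase lists for the three classic social engineering cues.
# Real calls vary far more; these only illustrate the idea.
CUES = {
    "authority": ["ceo", "cfo", "director", "it help desk", "legal"],
    "urgency": ["urgent", "right now", "immediately", "today", "before close of business"],
    "familiarity": ["as we discussed", "you know me", "remember when", "our deal"],
}


def flag_cues(transcript: str) -> dict:
    """Return, per cue, the phrases found in the transcript (case-insensitive, whole words)."""
    text = transcript.lower()
    found = {}
    for cue, phrases in CUES.items():
        hits = [p for p in phrases if re.search(r"\b" + re.escape(p) + r"\b", text)]
        if hits:
            found[cue] = hits
    return found


def is_high_risk(transcript: str) -> bool:
    """Hypothetical rule: two or more of the three cues means stop and verify."""
    return len(flag_cues(transcript)) >= 2


if __name__ == "__main__":
    call = "Hi, it's the CEO. I need an urgent wire transfer today for the deal, as we discussed."
    print(flag_cues(call))
    print(is_high_risk(call))
```

A call from “the CEO” asking for an urgent transfer “as we discussed” hits all three cues, which is exactly the moment to slow down and verify.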

A new trend is using Microsoft Teams, where external actors can call employees. The criminal gang Black Basta floods a target organization with spam email, then uses that as a pretext to call employees posing as the firm’s IT help desk manager, with the objective of gaining remote access to install ransomware.

Vishing Protections

The 3 principles of people, technology, and processes are critical to protecting organizations. vishr.ai begins with people, as voice is the channel into an organization. It identifies the people in the organization at risk of being scammed, uses real-world pretexts to test the organization automatically, and then quantifies the vulnerability risks. There are no manual, human-controlled assessments: this is voice AI enabled, automated, scalable, and quantified.
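As a rough illustration of what quantifying the risk could look like, the sketch below aggregates the results of simulated vishing calls per department. The `SimulatedCall` record, its fields, and the two rates are assumptions for illustration, not vishr.ai’s actual scoring. The report rate sits alongside the failure rate because people reporting attacks is part of the defense.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable


@dataclass
class SimulatedCall:
    """One automated vishing test call (hypothetical record format)."""
    employee: str
    department: str
    disclosed: bool  # employee gave the caller what the pretext asked for
    reported: bool   # employee reported the call to security afterwards


def risk_by_department(calls: Iterable[SimulatedCall]) -> Dict[str, Dict[str, float]]:
    """Return {department: {"failure_rate": ..., "report_rate": ...}} over the test calls."""
    totals = defaultdict(lambda: [0, 0, 0])  # [calls, disclosed, reported]
    for call in calls:
        t = totals[call.department]
        t[0] += 1
        t[1] += int(call.disclosed)
        t[2] += int(call.reported)
    return {
        dept: {"failure_rate": d / n, "report_rate": r / n}
        for dept, (n, d, r) in totals.items()
    }


if __name__ == "__main__":
    results = [
        SimulatedCall("alice", "finance", disclosed=True, reported=False),
        SimulatedCall("bob", "finance", disclosed=False, reported=True),
        SimulatedCall("carol", "help desk", disclosed=True, reported=False),
        SimulatedCall("dan", "help desk", disclosed=True, reported=True),
    ]
    for dept, rates in risk_by_department(results).items():
        print(dept, rates)
```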

The training is AI-powered, engaging, fail-friendly, and browser-based. What sparked my engagement is that we learn from our mistakes. I was once nearly scammed. A friend’s phone was stolen. The criminal read our conversation and created a pretext for why they needed an Apple voucher. It nearly worked: I was in the car driving to the supermarket when I thought, I’d better check. And that simple check revealed the scam. That was close!

This is what vishr.ai teaches its customers: the importance of verifying on a second channel:

- be politely paranoid;

- call back on an official number;

- ask for confirmation on a separate channel, for example Slack or company email;

- move the conversation from the single channel they contacted you on to something verified. In my case, I simply asked the criminal to confirm when we last met.

- Genuine colleagues will be grateful that identities are being verified; it’s how we do business every day. Only a criminal will get annoyed, which they did, which was nice.
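The second-channel rule can be expressed as a simple policy check. This is a minimal sketch assuming a hypothetical internal directory of official numbers (the numbers shown are fictional) and a hypothetical set of trusted channels. A request is never verified using the caller ID it arrived with, since caller ID can be spoofed, and the action proceeds only once it is confirmed through a call-back to the official number or a separate company channel.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical internal directory of official numbers (fictional numbers).
OFFICIAL_DIRECTORY = {
    "ceo": "+44 20 7946 0000",
    "finance director": "+44 20 7946 0001",
}

# Hypothetical channels that count as independent of the inbound call.
TRUSTED_CHANNELS = {"callback_official_number", "slack", "company_email"}


@dataclass
class InboundRequest:
    claimed_identity: str  # who the caller says they are
    caller_id: str         # shown number; can be spoofed, so never used to verify
    action: str            # what the caller is asking for


def callback_number(req: InboundRequest) -> Optional[str]:
    """Number to call back, taken from the directory; the caller ID is ignored."""
    return OFFICIAL_DIRECTORY.get(req.claimed_identity.strip().lower())


def may_proceed(req: InboundRequest, confirmed_via: Optional[str]) -> bool:
    """Allow the action only after confirmation on a trusted, separate channel."""
    if callback_number(req) is None:
        return False  # unknown identity: nothing official to verify against
    return confirmed_via in TRUSTED_CHANNELS  # None or "same_call" gives False


if __name__ == "__main__":
    req = InboundRequest("CEO", caller_id="+44 20 7946 0999", action="urgent wire transfer")
    print(callback_number(req))                          # directory number, not the caller ID
    print(may_proceed(req, "same_call"))                 # caller "confirms" on the same call
    print(may_proceed(req, "callback_official_number"))  # verified by calling back
```

The design choice is that the inbound call carries no weight at all: however familiar the voice, trust comes only from a channel you initiated.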

The bottom line is creating friction for attackers. On the technology side, deepfake detection still lags behind deepfake creation, hence the importance of people and processes in protecting against voice AI scams.

By acting together, enterprises can share best techniques and processes, common threats, public denunciations, and common protections, raising the costs for criminals.

Vishr Demo and Discussion

Aaron raised an important cultural issue: training people and then scaring them if they fail a test. In that scenario, people do not report attacks. “To err is human, to forgive divine” is attributed to Alexander Pope, and given the sophistication of voice AI scams, we must take a more realistic approach. Scams will be more frequent and they will work more often; we’re not machines.

So vishr.ai is creating a framework that enables continuous real-world testing and supports an organization across people, processes, and technology, though deepfake detection may remain trapped on the bleeding edge for the foreseeable future.

Bohdan was able to rejoin us and asked about dAIsy in the UK, the AI agent that wastes scammers’ time. While fun, it’s not a great use of resources. Criminals should go to jail. And scammers have vast resources available from the money they steal; it’s in the billions.

Enrico gave a great overview of the vishr dashboard. Just go to vishr.ai to book your free consultation and/or platform access. It’s available as a subscription service plus usage, and also as a managed service.

Bohdan wrapped up on a couple of points. In Ukraine, carriers require all businesses to register their phone numbers, to limit robocalls and spam calls and messages. This needs careful management to keep the list of approved numbers clean, but is worth tracking. Some carriers tend to allow most calls, as they earn revenue from them.

Viber shows the status of numbers, that is, whether a number has been reported for scams. This is definitely useful for customers in avoiding scams. Bohdan’s final advice is to use a dedicated number for all your 2FA, kept separate from your normal communications number. Given all the news on 2FA bypass involving Qantas, MFA is considered mandatory in 2025, but SMS 2FA on a phone number linked to you through OSINT is simply too high a risk.

3 thoughts on “TADSummit online Conference, Cybersecurity Training in the Age of Voice AI, Enrico Faccioli, vishr.ai”