Surbhi Rathore has been a sponsor of TADHack and TADSummit over the years. Symbl.ai has been leading the real-time AI category since the beginning in 2018. For the past 5.5 years Symbl has helped businesses understand multimodal interactions at scale to grow revenues and improve operations, across voice calls, video calls, and messaging.

Some of Symbl’s TADHack winners include:

RescueR: Autonomous rescue bot with sonar mapping and telecommunications technology to speed up response time for trapped victims. This hack came shortly after the hotel collapse in Florida.

Wizard Chess: Play chess with your voice, making it accessible for those with visual impairments or limited motor skills. The code is a basic Node app running on a server, which starts by making a Telnyx call to the conference room, which symbl.ai then joins. From there, anything said into the phone is processed by symbl.ai and passed via websockets to the web page. Computer moves are relayed by voice in the opposite direction to only the human player. (In earlier drafts the voice spoke to everyone, including symbl.ai, but since the moves the computer spoke were invalid for the human, nothing bad happened!)
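The post does not say how the transcribed speech was turned into chess moves, so the following is only a minimal sketch of one way that step could look. The function name `parse_spoken_move` and the assumption that players say moves as coordinate pairs (for example "e2 to e4") are hypothetical and are not taken from the original hack, which was a Node app.

```python
import re
from typing import Optional, Tuple

# Hypothetical pattern: a file letter (a-h) and a rank digit (1-8),
# optionally separated by whitespace, e.g. "e2" or "e 2".
_SQUARE = r"([a-h])\s*([1-8])"

# Two squares, optionally joined by the word "to". The leading \b avoids
# matching a letter that merely ends another word (e.g. "grade 2").
_MOVE_RE = re.compile(rf"\b{_SQUARE}\s*(?:to)?\s*{_SQUARE}", re.IGNORECASE)


def parse_spoken_move(transcript: str) -> Optional[Tuple[str, str]]:
    """Return (from_square, to_square) if the transcript contains a move.

    Returns None when no coordinate pair is found, so the caller can
    ignore chatter that is not a move.
    """
    match = _MOVE_RE.search(transcript)
    if match is None:
        return None
    from_file, from_rank, to_file, to_rank = match.groups()
    return (from_file.lower() + from_rank, to_file.lower() + to_rank)
```

In a setup like the one described, each transcript arriving over the websocket could be passed through such a function, and only non-`None` results forwarded to the chess board on the web page.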

Colloqui11ly: Accessible conferencing solution (using TTS and STT). It makes the conference available to all by allowing some users to interact via text message (both to “hear” the chat, and respond) while others get the audio experience.

Podcast Annotator: Hands-free note-taking while listening to a podcast stream. You first start a podcast from the webpage, which initiates a call to your phone (it could also be triggered by DTMF tones). At this point you can listen to the podcast and say things like “Good point” and “must look that up”. These phrases are transcribed and added to a timeline for later review. Once the podcast ends, this review is sent via SMS.
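The timeline step can be pictured with a small sketch. This is not the hack's actual code; the `Note` and `Timeline` names, the use of second offsets, and the SMS text layout are all assumptions made for illustration.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Note:
    offset_seconds: int  # assumed: seconds since the podcast started
    text: str            # the transcribed phrase


@dataclass
class Timeline:
    notes: List[Note] = field(default_factory=list)

    def add(self, offset_seconds: int, text: str) -> None:
        """Record a transcribed phrase at the given playback offset."""
        self.notes.append(Note(offset_seconds, text.strip()))

    def to_sms(self, title: str) -> str:
        """Format the notes, ordered by offset, as one text message body."""
        lines = [f"Notes for {title}:"]
        for note in sorted(self.notes, key=lambda n: n.offset_seconds):
            minutes, seconds = divmod(note.offset_seconds, 60)
            lines.append(f"[{minutes:02d}:{seconds:02d}] {note.text}")
        return "\n".join(lines)
```

Sending the resulting string would be left to whichever SMS provider is in use; that call is deliberately not shown here.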

Custom Call Score

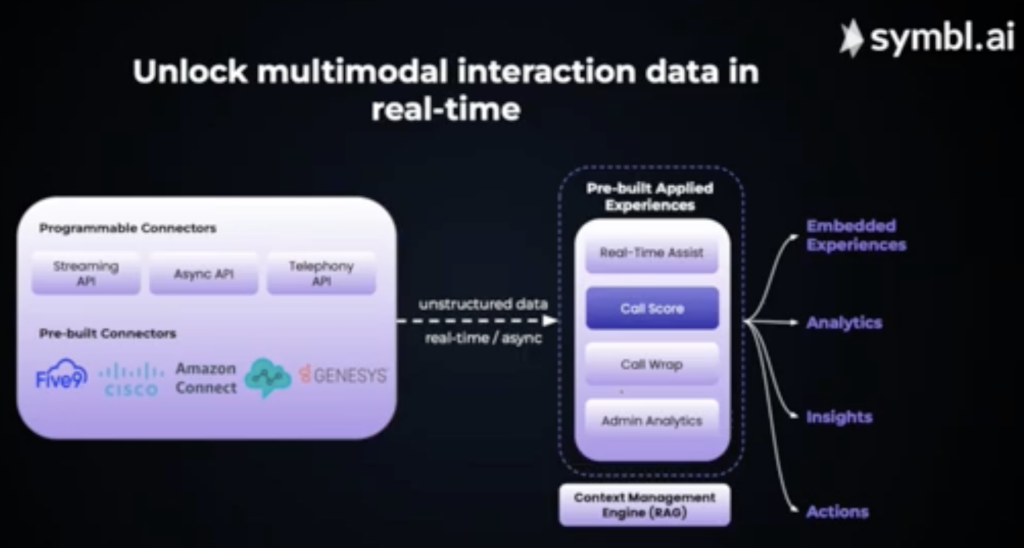

The focus of this session is the new customized scoring features in Symbl’s Call Score API, delivering unified compliance for human and AI agents.

AI agents are augmenting, and in some cases replacing, human agents, so ensuring quality is key to achieving better customer experiences (CX). There have been multiple incidents of voice agents hallucinating while interacting with customers, thereby negatively impacting customer trust. Call Score provides the qualitative measures needed to monitor a hybrid workforce.

With ‘Custom Criteria’ & ‘Scorecards’, businesses can define evaluation criteria, build different scoring logic for their human & AI agents and directly integrate call scores into their CRM, BI tools or custom applications – all with a single API.

Surbhi ran through a demo of Call Score, focusing on a human agent’s Question Handling, Energy, and Confidence. Call Score produced a report, which can be evaluated against customer feedback for that specific call, so the qualitative analysis can also be quantified across the business’s customers over time.

For the past 18 months Symbl has been building out what they call applied experiences: real-time agent, call score (focus of today), call wrap (focused summary for specific purpose), and analytics.

Call Score enables quality assessment automation across human, AI agent, and hybrid interactions. Examples include detecting bias or fraud, specifically whether personal information is being asked for. Likewise, when an agent shows a lack of empathy, Symbl provides the evidence from within the conversation for the assessment.

The dimensions for the scoring are:

- Custom Criteria – defined in natural language, prioritized checklists of what should be covered in the agent conversation. For example, different regions could have different criteria.

- Scorecards – combine the custom criteria with Symbl’s criteria to deliver an overall assessment for the agent’s performance on that call.
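To make the relationship between criteria and a scorecard concrete, here is a minimal sketch. It is not Symbl’s API or scoring method: the `Criterion` class, the 0 to 100 score range, and the weighted average used to combine scores are all assumptions chosen for illustration, since the session did not describe how the overall assessment is computed.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Criterion:
    name: str
    description: str  # natural-language statement of what should be covered
    weight: float     # assumed: a higher weight means a higher priority


def overall_score(criteria: List[Criterion], scores: Dict[str, float]) -> float:
    """Combine per-criterion scores (assumed 0-100) into one weighted average."""
    total_weight = sum(c.weight for c in criteria)
    if total_weight <= 0:
        raise ValueError("criteria must have a positive total weight")
    missing = [c.name for c in criteria if c.name not in scores]
    if missing:
        raise KeyError(f"no score for criteria: {missing}")
    return sum(c.weight * scores[c.name] for c in criteria) / total_weight


# A hypothetical scorecard mixing custom criteria with a built-in one.
scorecard = [
    Criterion("upsell_offered", "Agent offers a relevant upgrade during support.", 2.0),
    Criterion("identity_check", "Agent verifies the caller before sharing details.", 1.0),
    Criterion("question_handling", "Built-in style criterion for handling questions.", 1.0),
]
call_scores = {"upsell_offered": 100.0, "identity_check": 50.0, "question_handling": 80.0}
print(overall_score(scorecard, call_scores))  # prints 82.5
```

Under this sketch, different regions or programs would simply use different lists of criteria and weights, while the combining logic stays the same.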

One of Symbl’s customers uses Call Score across sales and support agents in biomedical devices, where one of the criteria is upsell within support. They’ve been able to move away completely from Excel spreadsheet quality assessments and fully automate that quality assessment process.

Another customer, a BPO (Business Process Outsourcer), serves many customers with many different programs. The custom criteria enable them to accurately measure performance for each customer and program, hence automating quality measurement.

For a human agent, feedback is focused on the gaps in performance and the necessary coaching, while for an AI agent, feedback is a prompt. We’re certainly living through an interesting time in AI agents.
